CloudFlare Workers is a serverless platform (similar to AWS Lambda or Google Cloud Functions) that we can use to deploy functions to the edge network. Serverless is the future, with two big advantages: there are no servers to maintain (no DevOps cost), and it is highly scalable, since serverless functions are automatically scaled horizontally across nodes. Latency is also low, because the node serving a request is geographically close to the visitor.

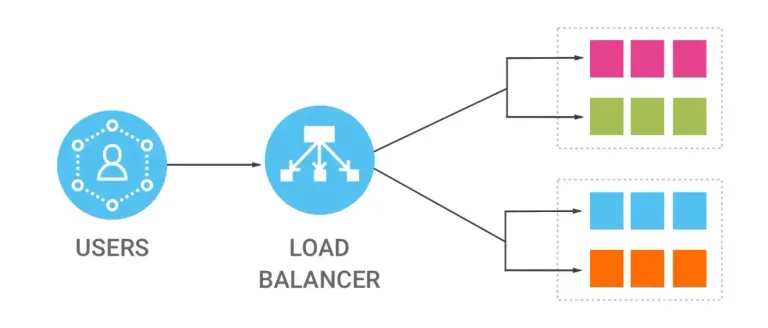

A load balancer is a server that distributes load across a group of servers. However, a single load balancer is itself a single point of failure. With CloudFlare Workers, in contrast, the worker nodes are distributed across the edge network.

Deploying the load balancer with serverless technology on distributed network nodes can therefore be quite advantageous.

Distributed Load Balancer by CloudFlare Worker

The cost of setting up a distributed load balancer is affordable. CloudFlare Workers has a free plan, which gives you 100K API calls per day and a maximum of 10ms CPU time per request. On the paid plan, the monthly quota is 10 million requests, with a maximum of 50ms CPU time per request.

For example, let's first define a list of servers behind the distributed load balancer.

let nodes = [
    "https://api.justyy.com",
    "https://api.steemyy.com"
];

We also need to implement Promise.any ourselves, as it is not supported on the worker nodes of Cloudflare Workers (at the time of writing). Promise.any returns the first promise that is fulfilled and ignores rejections unless every promise rejects.

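// Swap the outcome of a promise: fulfilled becomes rejected and vice versa
function reverse(promise) {
    return new Promise((resolve, reject) => Promise.resolve(promise).then(reject, resolve));
}

// Promise.all rejects on the first rejection, so on reversed promises it
// "rejects" on the first fulfilment; reversing again gives Promise.any
function promiseAny(iterable) {
    return reverse(Promise.all([...iterable].map(reverse)));
}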
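To see how the helper behaves, here is a small self-contained sketch. The `delay` helper and the labels are hypothetical and only simulate servers that answer at different speeds; `reverse` and `promiseAny` are repeated so that the snippet runs on its own.

```javascript
// Swap fulfilment and rejection of a promise.
function reverse(promise) {
    return new Promise((resolve, reject) => Promise.resolve(promise).then(reject, resolve));
}

// Fulfils with the first fulfilled input; rejects (with an array of errors)
// only when every input rejects.
function promiseAny(iterable) {
    return reverse(Promise.all([...iterable].map(reverse)));
}

// Hypothetical helper: settle after `ms` milliseconds.
function delay(ms, value, fail = false) {
    return new Promise((resolve, reject) =>
        setTimeout(() => (fail ? reject(new Error(value)) : resolve(value)), ms));
}

async function demo() {
    const inputs = () => [
        delay(10, "fast but failing", true),
        delay(30, "slow but healthy"),
        delay(60, "slowest"),
    ];
    // promiseAny skips the early rejection and waits for a fulfilment.
    const any = await promiseAny(inputs());
    // Promise.race settles with whatever finishes first, here a rejection.
    const race = await Promise.race(inputs()).catch(e => "rejected: " + e.message);
    return { any, race };
}

// any is "slow but healthy", race is "rejected: fast but failing"
demo().then(result => console.log(result));
```

For a load balancer this difference matters: a server that fails quickly should not win just because it answered first.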

In contrast, Promise.race returns the first promise that settles, whether it is fulfilled or rejected. Here we need Promise.any, because we want the first (fastest) server that answers successfully. The following function sends a request to a server and returns the server name:

async function contactServer(server) {
    // Rejects if the server cannot be reached, so promiseAny skips it
    await fetch(server, {
        method: "GET"
    });
    return {
        "server": server,
    };
}

We can improve the serverless load balancer by asking each server for more information, such as the load average of the API server, and then choosing the least loaded one.
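One way to sketch the least-loaded idea is shown below. It assumes each server can report its load average somehow; the `getLoad` callback that fetches and parses that value is hypothetical and would need to match your own API. It also assumes `Promise.allSettled` is available in the runtime.

```javascript
// Ask every server for its load and return the one reporting the lowest value.
// `getLoad(server)` is a hypothetical async callback that returns a number.
async function pickLeastLoaded(servers, getLoad) {
    const results = await Promise.allSettled(servers.map(s => getLoad(s)));
    let best = null;
    results.forEach((result, i) => {
        // Ignore servers that failed or returned something that is not a number.
        if (result.status !== "fulfilled" || !Number.isFinite(result.value)) return;
        if (best === null || result.value < best.load) {
            best = { server: servers[i], load: result.value };
        }
    });
    if (best === null) throw new Error("No server reported its load");
    return best;
}
```

Note the trade-off: unlike promiseAny, this waits for every server to answer (or fail) before choosing, so the slowest node determines how long the selection takes.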

Handling the CORS and Headers

The entry point of a Cloudflare Worker should handle CORS and OPTIONS requests.

// corsHeaders is referenced below but was not defined in the original
// listing; these values are an assumption, adjust them to your own policy
const corsHeaders = {
    'Access-Control-Allow-Origin': '*',
    'Access-Control-Allow-Methods': 'GET, HEAD, POST, OPTIONS',
    'Access-Control-Allow-Headers': 'Content-Type',
};

function handleOptions(request) {
    // Make sure the necessary headers are present
    // for this to be a valid pre-flight request
    if (
        request.headers.get('Origin') !== null &&
        request.headers.get('Access-Control-Request-Method') !== null &&
        request.headers.get('Access-Control-Request-Headers') !== null
    ) {
        // Handle CORS pre-flight request.
        // If you want to check the requested method + headers
        // you can do that here.
        return new Response(null, {
            headers: corsHeaders,
        })
    } else {
        // Handle standard OPTIONS request.
        // If you want to allow other HTTP Methods, you can do that here.
        return new Response(null, {
            headers: {
                Allow: 'GET, HEAD, POST, OPTIONS',
            },
        })
    }
}

addEventListener('fetch', event => {
    const request = event.request;
    const method = request.method.toUpperCase();
    if (method === 'OPTIONS') {
        // Handle CORS preflight requests
        event.respondWith(handleOptions(request))
    } else if (
        method === 'GET' ||
        method === 'HEAD' ||
        method === 'POST'
    ) {
        // Handle requests to the API server
        event.respondWith(handleRequest(request))
    } else {
        event.respondWith(
            new Response(null, {
                status: 405,
                statusText: 'Method Not Allowed',
            }),
        )
    }
});

Forwarding Requests

Once we know which (fastest) server should serve the current request, we need to forward the request to that origin server and, once we have the result, send it back to the user. The following two functions forward GET and POST requests respectively. You might want to add other methods such as PUT, PATCH and DELETE.

async function forwardRequestGET(apiURL) {
    const response = await fetch(apiURL, {
        method: "GET",
        headers: {
            'Content-Type': 'application/json'
        },
        redirect: "follow"
    });
    // Return the body of the origin server's response as text
    return response.text();
}

async function forwardRequestPOST(apiURL, body) {
    const response = await fetch(apiURL, {
        method: "POST",
        redirect: "follow",
        headers: {
            'Content-Type': 'application/json'
        },
        body: body
    });
    // Return the body of the origin server's response as text
    return response.text();
}
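To cover the other methods mentioned above, the two functions could be merged into one. The sketch below is only one possible shape: the name `forwardRequest` and the `fetchImpl` parameter are hypothetical, and the latter exists only so that the function can be tried out with a stub instead of a real network call.

```javascript
// Forward a request with any HTTP method to the chosen origin server.
// `fetchImpl` defaults to the global fetch and is only a parameter so the
// function can be exercised with a stub.
async function forwardRequest(apiURL, method, body, fetchImpl = fetch) {
    const options = {
        method: method,
        redirect: "follow",
        headers: { "Content-Type": "application/json" },
    };
    // GET and HEAD requests must not carry a body.
    if (method !== "GET" && method !== "HEAD" && body != null) {
        options.body = body;
    }
    const response = await fetchImpl(apiURL, options);
    return response.text();
}
```

With this in place, a call such as `forwardRequest(forwardedURL, "PUT", body)` would follow the same path as the POST case.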

Load Balancer Implementation using CloudFlare Worker

Finally, below is the main implementation of the load balancer as a CloudFlare Worker script, which runs on the distributed edge network.

/**
 * Respond to the request
 * @param {Request} request
 */
async function handleRequest(request) {
    const country = request.headers.get('cf-ipcountry');
    // Contact every node in parallel and keep the first one that answers
    const servers = [];
    for (const server of nodes) {
        servers.push(contactServer(server));
    }
    const load = await promiseAny(servers);
    const forwardedURL = load['server'];
    const method = request.method.toUpperCase();
    let result;
    let res;
    let version = "";
    try {
        version = await getVersion(load['server']);
    } catch (e) {
        // JSON.stringify() of an Error yields "{}", so use its string form
        version = String(e);
    }
    try {
        if (method === "POST") {
            const body = await request.text();
            result = await forwardRequestPOST(forwardedURL, body);
        } else if (method === "GET") {
            result = await forwardRequestGET(forwardedURL);
        } else {
            res = new Response(null, {
                status: 405,
                statusText: 'Method Not Allowed',
            });
            res.headers.set('Access-Control-Allow-Origin', '*');
            res.headers.set('Cache-Control', 'max-age=3600');
            res.headers.set("Origin", load['server']);
            res.headers.set("Country", country);
            return res;
        }
        res = new Response(result, {status: 200});
        res.headers.set('Content-Type', 'application/json');
        res.headers.set('Access-Control-Allow-Origin', '*');
        res.headers.set('Cache-Control', 'max-age=3');
        res.headers.set("Origin", load['server']);
        res.headers.set("Version", version);
        res.headers.set("Country", country);
    } catch (e) {
        // result is undefined when forwarding failed, so report the error instead
        res = new Response(JSON.stringify({"error": String(e)}), {status: 500});
        res.headers.set('Content-Type', 'application/json');
        res.headers.set('Access-Control-Allow-Origin', '*');
        res.headers.set('Cache-Control', 'max-age=3');
        res.headers.set("Origin", load['server']);
        res.headers.set("Version", version);
        res.headers.set("Country", country);
        res.headers.set("Error", String(e));
    }
    return res;
}
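The success and error branches above set almost the same headers, so they could share a small helper. The following is only a sketch: `setCommonHeaders` is a hypothetical name, and it accepts anything with a `set(name, value)` method, such as `res.headers`.

```javascript
// Set the headers shared by every response of the load balancer.
// `headers` is anything with a set(name, value) method, e.g. res.headers.
function setCommonHeaders(headers, { server, country, version, maxAge = 3 }) {
    headers.set("Access-Control-Allow-Origin", "*");
    headers.set("Cache-Control", "max-age=" + maxAge);
    headers.set("Origin", server);
    // cf-ipcountry may be missing, so only set Country when it is known.
    if (country) headers.set("Country", country);
    // The 405 branch has no version to report, so Version is optional too.
    if (version !== undefined) headers.set("Version", version);
    return headers;
}
```

Inside handleRequest this would be called as `setCommonHeaders(res.headers, { server: load['server'], country, version })`, with `maxAge: 3600` for the 405 response.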

Please note that the load balancer can add custom headers before returning the response. Here we add a Version header, which is obtained via a separate API call to the origin server:

async function getVersion(server) {
    // Ask the origin server for its version via a JSON-RPC call
    const response = await fetch(server, {
        method: "POST",
        headers: {
            'Content-Type': 'application/json'
        },
        body: JSON.stringify({"id":0,"jsonrpc":"2.0","method":"call","params":["login_api","get_version",[]]})
    });
    return response.text();
}

By implementing such a distributed load balancer, we improve availability and durability at a low cost, because with serverless technology we do not need to maintain a server (monitoring, upgrades and security patches). At the same time, we forward requests to the 'fastest' origin server via a CloudFlare Worker that is geographically close to the user (low latency).

--EOF (The Ultimate Computing & Technology Blog) --
