Music lovers, yesterday I discussed common mixing mistakes made by beginner music engineers, and one of them is skipping the balancing stage. So tonight I will discuss how to balance musical instruments in general.

IMG: Not Balancing (Screenshot of my article)

As listeners, we enjoy music in two ways: live and recorded. For music to sound good in both settings, a music engineer must be able to balance and mix for each of them, because live sound and recording are handled in different ways.

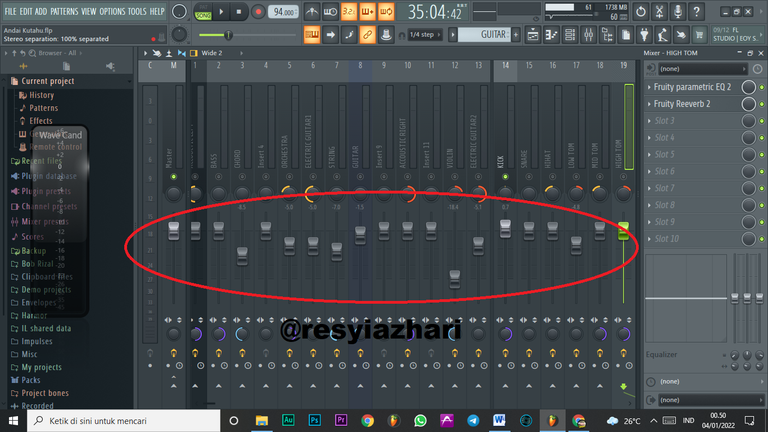

Balancing is an essential step for a music engineer, because it makes sure no instrument is lopsided against the others, so the whole arrangement is pleasant to hear. Balancing also sets each sound level in proportion to the genre of the song being worked on, and it determines how near or far each instrument sounds. Placing each instrument in the stereo field is also part of balancing, although that process is called panning.
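Panning itself boils down to a pair of gains, one per speaker. Below is a minimal Python sketch, assuming the common sine/cosine "constant-power" pan law (DAWs differ, and some use other pan laws); the function names are my own and do not come from FL Studio or any plugin. It maps a pan position from -1 (hard left) to +1 (hard right) onto left and right gains whose squared sum stays at 1, so the sound keeps roughly the same loudness as it moves across the stereo field.

```python
import math


def constant_power_pan(pan: float) -> tuple[float, float]:
    """Return (left_gain, right_gain) for a pan position in [-1.0, 1.0].

    -1.0 is hard left, 0.0 is centre, 1.0 is hard right. This is the
    sine/cosine constant-power law, which puts a centred sound about
    3 dB down in each speaker.
    """
    if not -1.0 <= pan <= 1.0:
        raise ValueError("pan must be between -1.0 and 1.0")
    angle = (pan + 1.0) * math.pi / 4.0  # map -1..1 onto 0..pi/2
    return math.cos(angle), math.sin(angle)


def gain_to_db(gain: float) -> float:
    """Convert a linear gain to decibels, treating near-zero gain as silence."""
    return 20.0 * math.log10(gain) if gain > 1e-9 else float("-inf")


for pan in (-1.0, -0.5, 0.0, 0.5, 1.0):
    left, right = constant_power_pan(pan)
    print(f"pan {pan:+.1f}: L {gain_to_db(left):7.2f} dB  R {gain_to_db(right):7.2f} dB")
```

The constant-power law is one choice among several; a simple linear law (left = 1 - x, right = x) makes centred sounds dip in loudness, which is why many mixers prefer the -3 dB centre shown here.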

Imagine 20 instruments playing at the same time, arranged so that every one of them can be heard and together they create good harmony. No instrument should be buried under the others, and the instruments should not all be piled up in the center. Nor should all of them sit at the same level.

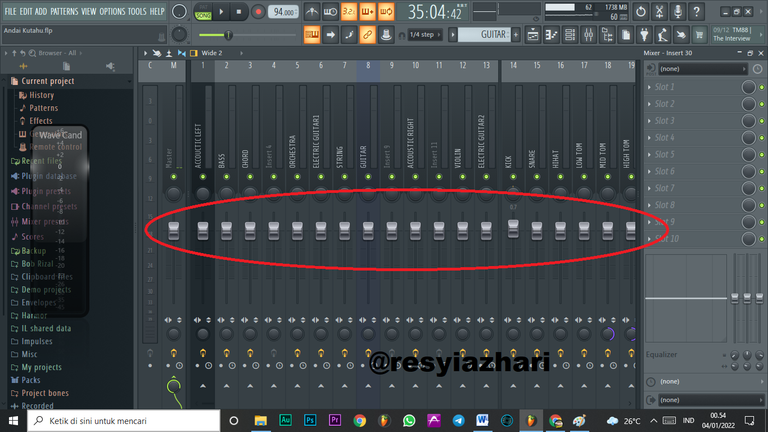

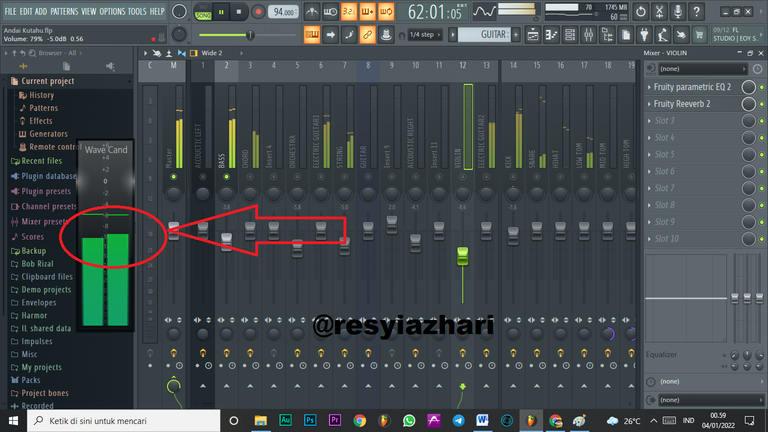

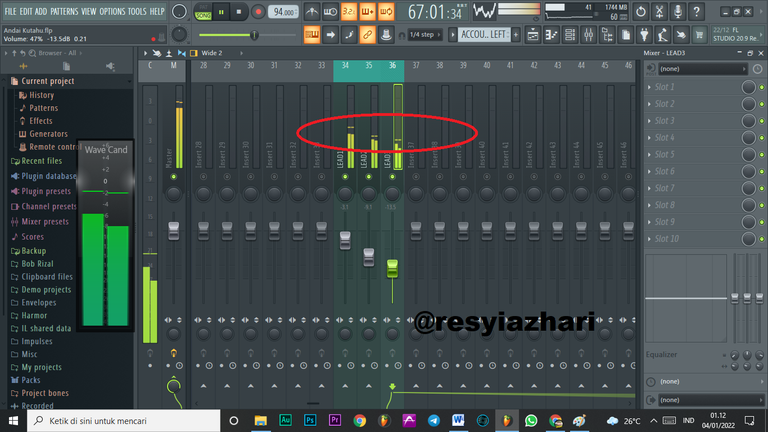

IMG: Before Balancing (Screenshot of the app I'm working on)

IMG: After Balancing (Screenshot of the app I'm working on)

Before balancing, a music engineer should pay attention to the following important things:

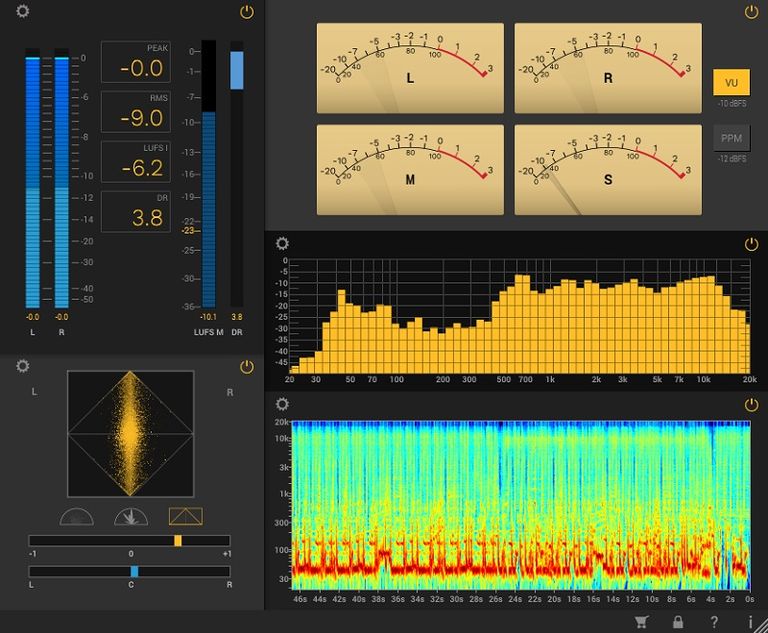

1. Understand monitoring tools

Even if a music engineer monitors on so-called flat monitors, that does not guarantee the device is completely flat. In practice no monitor is perfectly flat; some frequencies are still emphasized more than others. That is why a music engineer must really understand his monitoring tools. By knowing where his monitors are strong or weak in the frequency range, he can adjust how he interprets what he hears.

2. Understand the studio room

The studio room also shapes the sound we hear from our monitors. Sound reflected off the walls reaches the ear at a slightly different time than the direct sound. Usually these reflections arrive less than 50 ms after the direct sound, so we hear them as part of the original sound from the monitor rather than as a separate echo. The solution is acoustic treatment, so that the room produces as few reflections as possible. We can also hire experts in this field. Next, if your monitors have room-control settings, set them up properly. Here is how:

a. Listen to music and memorize how the lows and highs sound in your room.

b. Listen to music in at least 2 rooms and on 2 different pairs of speakers.

c. Adjust the low and high frequency settings until your studio sounds close to what you heard in those other rooms and on those other speakers. That way, what you hear in the studio is roughly the same as what you hear elsewhere.
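To get a feel for how quickly a wall reflection follows the direct sound in step 2, here is a small Python sketch. It assumes a simplified 2-D room with one flat side wall, a speed of sound of about 343 m/s, and made-up speaker and listener positions; the 50 ms threshold is the figure given in this article. It uses the image-source trick: mirroring the speaker across the wall gives a virtual source whose straight-line distance to the listener equals the length of the reflected path. This estimates a single first reflection only, not a full room simulation, and the function name is hypothetical.

```python
import math

# Approximate speed of sound in air at about 20 °C.
SPEED_OF_SOUND_M_S = 343.0

# Threshold quoted in this article: reflections arriving sooner than this
# tend to be heard as part of the direct sound.
FUSION_THRESHOLD_MS = 50.0


def side_wall_delay_ms(speaker, listener, wall_y):
    """Extra delay (ms) of a first reflection off a wall along the line y = wall_y.

    Positions are (x, y) tuples in metres. The speaker is mirrored across the
    wall; the distance from that image to the listener is the reflected path.
    """
    image = (speaker[0], 2.0 * wall_y - speaker[1])
    direct = math.dist(speaker, listener)
    reflected = math.dist(image, listener)
    return (reflected - direct) / SPEED_OF_SOUND_M_S * 1000.0


# Hypothetical layout: speaker at the origin, listener 2 m in front of it,
# side wall 1.5 m to the side.
delay = side_wall_delay_ms(speaker=(0.0, 0.0), listener=(2.0, 0.0), wall_y=1.5)
print(f"reflection arrives {delay:.2f} ms after the direct sound")
if delay < FUSION_THRESHOLD_MS:
    print("heard as part of the direct sound")
else:
    print("may be heard as a separate echo")
```

In a typical small studio, first reflections land at only a few milliseconds, far below the 50 ms mark, which is why they blend into what we hear from the monitors instead of sounding like an echo.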

3. When comparing results, keep the levels the same.

Besides changing the character of the sound, a plugin also changes its level. Don't be fooled into thinking the audio got better when it has merely become louder. When comparing, the processed and unprocessed versions must be at the same level, and at that same level the processed result must sound better than the original.
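Level matching can be done with simple arithmetic. A minimal Python sketch, assuming the audio is a list of float samples where 1.0 is full scale: it measures the RMS level of the unprocessed and processed versions and scales the processed one so both sit at the same level before you compare them by ear. RMS is only a rough stand-in for perceived loudness; loudness meters based on ITU-R BS.1770 (LUFS) are the more careful choice. The signals and function names below are hypothetical.

```python
import math


def rms(samples):
    """Root-mean-square level of a list of float samples (full scale = 1.0)."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def to_dbfs(value):
    """Convert a linear level to dBFS (-inf for silence)."""
    return 20.0 * math.log10(value) if value > 0 else float("-inf")


def level_match(reference, processed):
    """Scale `processed` so its RMS level equals that of `reference`."""
    processed_rms = rms(processed)
    if processed_rms == 0:
        return list(processed)
    gain = rms(reference) / processed_rms
    return [s * gain for s in processed]


# Stand-in signals: a dry 100 Hz sine and a "processed" copy that a
# hypothetical plugin simply made twice as loud.
sample_rate = 8000
dry = [0.25 * math.sin(2 * math.pi * 100 * n / sample_rate) for n in range(sample_rate)]
wet = [2.0 * s for s in dry]

matched = level_match(dry, wet)
print(f"dry {to_dbfs(rms(dry)):.2f} dBFS, wet {to_dbfs(rms(wet)):.2f} dBFS, "
      f"matched {to_dbfs(rms(matched)):.2f} dBFS")
```

Here the "plugin" only added about 6 dB of level; after matching, the two versions are identical, which is exactly the trap this step warns about.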

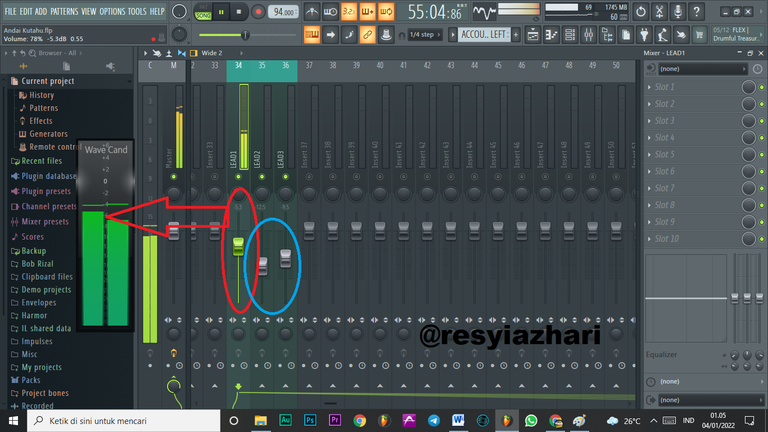

Once you are sure your monitoring tools, such as speakers and headphones, behave as expected, you can use a metering plugin to monitor sound levels. Many metering plugins are available, both paid and free, some for a single DAW and some supporting multiple DAWs. A meter shows clearly how loud each sound actually is.
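What a basic level meter reports can be sketched in a few lines. The Python example below is a simplified assumption of how such a meter works: it splits the signal into non-overlapping windows (300 ms here, an arbitrary choice) and reports the sample peak and the RMS level of each window in dBFS. Real metering plugins such as Wave Candy add ballistics, oversampling, and other display options, so treat this as an illustration, not as how any particular plugin is implemented.

```python
import math


def to_dbfs(value):
    """Convert a linear level to dBFS (-inf for silence)."""
    return 20.0 * math.log10(value) if value > 0 else float("-inf")


def meter_readings(samples, sample_rate, window_ms=300.0):
    """Return (start_time_s, peak_dbfs, rms_dbfs) for consecutive windows.

    A simplified meter: sample peak and plain RMS over non-overlapping
    windows, with no ballistics, oversampling or loudness weighting.
    """
    size = max(1, int(sample_rate * window_ms / 1000.0))
    readings = []
    for start in range(0, len(samples), size):
        block = samples[start:start + size]
        peak = max(abs(s) for s in block)
        rms = math.sqrt(sum(s * s for s in block) / len(block))
        readings.append((start / sample_rate, to_dbfs(peak), to_dbfs(rms)))
    return readings


# Test tone: 1 s of a 440 Hz sine at 0.5, then 1 s at 0.1 (8 kHz sample rate).
sr = 8000
tone = [(0.5 if n < sr else 0.1) * math.sin(2 * math.pi * 440 * n / sr)
        for n in range(2 * sr)]
for t, peak, level in meter_readings(tone, sr):
    print(f"{t:4.1f} s  peak {peak:6.2f} dBFS  RMS {level:6.2f} dBFS")
```

For a steady sine, the RMS reading sits about 3 dB below the peak; on real instruments the gap between peak and RMS varies a lot, which is one reason meters show both.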

IMG: Wave Candy in FL Studio (Screenshot of the app I'm working on)

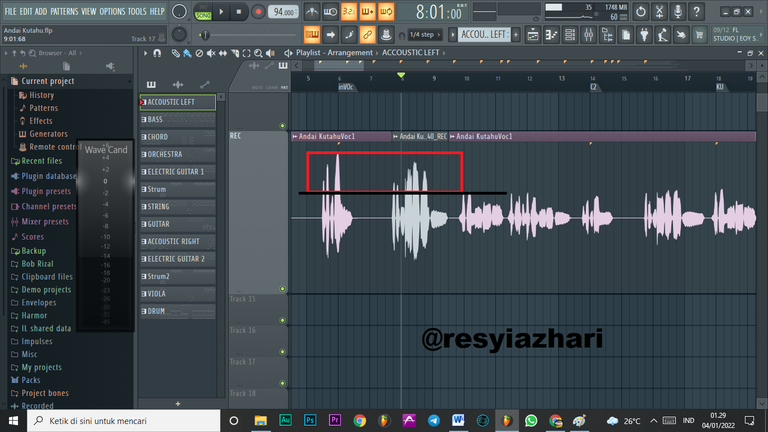

Balancing doesn't always involve two or more instruments; an instrument must also be balanced against itself. For example, a vocalist's level sometimes varies from phrase to phrase when what is needed is a consistent level. In that case we can balance the vocal first, before compressing it, taking care not to damage the desired character. Later, when the vocal is balanced against the other instruments, its overall level may still need to change.
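Balancing a vocal against itself before compression is often done by adjusting the gain of each phrase by hand. A minimal Python sketch of that idea, assuming the vocal is a list of float samples split into fixed-length segments standing in for phrases: each segment gets a gain that moves its RMS level toward a target, capped at a maximum change (6 dB here, an arbitrary value) so the performance is evened out without being flattened. The target, cap, and helper names are hypothetical; a real edit would place segment boundaries at phrase breaks and add short fades instead of the hard gain steps used here.

```python
import math


def segment_gains_db(samples, segment_len, target_dbfs, max_change_db=6.0):
    """Gain (dB) per segment that moves each segment's RMS toward target_dbfs.

    The change is capped at +/- max_change_db. Silent segments are left alone.
    """
    gains = []
    for start in range(0, len(samples), segment_len):
        segment = samples[start:start + segment_len]
        rms = math.sqrt(sum(s * s for s in segment) / len(segment))
        if rms == 0:
            gains.append(0.0)
            continue
        change = target_dbfs - 20.0 * math.log10(rms)
        gains.append(max(-max_change_db, min(max_change_db, change)))
    return gains


def apply_segment_gains(samples, segment_len, gains_db):
    """Apply one gain per segment (hard steps; a real edit would add short fades)."""
    out = []
    for i, gain_db in enumerate(gains_db):
        factor = 10.0 ** (gain_db / 20.0)
        segment = samples[i * segment_len:(i + 1) * segment_len]
        out.extend(s * factor for s in segment)
    return out


def phrase(amplitude, sample_rate=8000, freq=200.0):
    """Half a second of sine wave standing in for one sung phrase."""
    return [amplitude * math.sin(2 * math.pi * freq * n / sample_rate)
            for n in range(sample_rate // 2)]


# A "vocal" whose phrases come in at very different levels.
vocal = phrase(0.05) + phrase(0.3) + phrase(0.12)
gains = segment_gains_db(vocal, segment_len=4000, target_dbfs=-18.0)
levelled = apply_segment_gains(vocal, 4000, gains)
print("gain per phrase (dB):", [round(g, 2) for g in gains])
```

The cap is the part that protects the "desired character": the very quiet first phrase is raised by only 6 dB instead of being pushed all the way to the target.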

IMG: Wave Vocal (Screenshot of the app I'm working on)

When the same kind of instrument appears more than once, whether the parts are played simultaneously (layering) or not, the music engineer must first decide which one will be the lead. Once the lead is chosen, we know which instrument should dominate. Here is an example in FL Studio, where I used Wave Candy. Look at the following picture:

IMG: Layering instruments (Screenshot of the app I'm working on)
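Once the lead layer is chosen, making it dominate is mostly a matter of relative gain. A minimal Python sketch, assuming the layers are already time-aligned, equally long lists of float samples: the lead keeps 0 dB and each supporting layer is turned down before everything is summed. The sine "layers" and the -6 dB and -10 dB offsets are hypothetical examples, not rules, and the helper names are my own.

```python
import math


def db_to_gain(db):
    """Convert decibels to a linear gain factor."""
    return 10.0 ** (db / 20.0)


def mix_layers(layers, gains_db):
    """Sum equally long layers after applying a per-layer gain in dB."""
    if len(layers) != len(gains_db):
        raise ValueError("need one gain per layer")
    length = len(layers[0])
    if any(len(layer) != length for layer in layers):
        raise ValueError("layers must have the same length")
    mixed = [0.0] * length
    for layer, db in zip(layers, gains_db):
        gain = db_to_gain(db)
        for i, s in enumerate(layer):
            mixed[i] += gain * s
    return mixed


def sine(freq, amp, sample_rate=8000, seconds=1.0):
    """Simple sine tone standing in for one recorded layer."""
    count = int(sample_rate * seconds)
    return [amp * math.sin(2 * math.pi * freq * n / sample_rate) for n in range(count)]


# The lead layer stays at 0 dB; the supporting layers sit below it.
layers = [sine(220.0, 0.4), sine(440.0, 0.4), sine(110.0, 0.4)]
gains_db = [0.0, -6.0, -10.0]
mix = mix_layers(layers, gains_db)
peak = max(abs(s) for s in mix)
print(f"mix peak: {20 * math.log10(peak):.2f} dBFS")
```

Checking the peak of the summed layers matters because stacked layers add up; if the result approaches 0 dBFS, lower all layers together rather than changing their relative balance.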

Once you know these benchmarks for good balancing, the rest is up to your ears and your skills.

That's my short article. I hope it is useful for everyone, especially beginner music engineers like me.


I previously posted this article on my blog.