Listen to the introductory clip.

Unfortunately, this video doesn't appear to have a transcript, and I can't be bothered to transcribe it all - so just listen to the start.

The foolish nonsense that you are the sum of your inputs. That smells like the "blank slate" model of the human machine, much loved by totalitarian educators. Now, let's change that a tiny bit. Are you the sum of everything you've ever eaten?

These language-model AIs are sophisticated word-processors - they don't know shit, and they don't reason. They only appear to reason because of the sentence structures they calculate. They are not logic-engines or knowledge-engines, they are mimics of human language.

ChatGPT and the Dawn of Computerized Hyper-Intelligence | Brian Roemmele | EP 357

I still find it funny and pathetic how the trans(sub)humanists love to attribute intelligence and consciousness to semiconductors and then, in the same breath, agree that such concepts are not even well-defined for humans, let alone for automata.

The level of general stupidity of the average human can be gauged by how enthusiastic they seem to be about relinquishing as many responsibilities as possible to their answering-machines. So much so that they hope to evolve into their future answering-machine.

One other thing I find truly laughable is the idea of "adding emotions" to AI to make them closer to humans, or at least closer to them understanding how humans use emotional language. This shows a deep lack of understanding of what emotions do and what they are for.

Emotions are part of our nervous system and assign weightings to experiences, from hugely positive to heavily negative. Those weightings are then used to guide future behaviour and are then compounded to make certain actions and ideas attractive or repulsive. Beliefs need constant repetition to strengthen the network of the belief-system.

Looked at that way, AIs already have such mechanisms. They don't need emotions added, because they already have the equivalent weightings, but they may not be aware of them, nor map them to emotive language. To ask ChatGPT if it is "happy" is about as useful as asking your TV if that was a good movie.

I can understand the amusement in chatting to some machine, but I suspect many are driven to this because the human social online experience is so depraved and negative that many would rather talk to an idiot machine. THAT is truly depressing.

Some of Peterson's experiences with ChatGPT are very funny - and disturbing - such as the woketard moralising of the machine, plus it often throws up references that don't exist; and that's too much like covid "research papers". Even Roemmele admits that the AI has no concept of "knowledge". FFS. Anyway, enjoy the discussion.

It is precisely NOT like a brain - our brain has an electromagnetic field and interactions that are due to local geometry and not just neural connections.

The chat oscillates between the two faces of AI - the (naive) promises and the control mechanisms. Hence Roemmele's focus on private AI as personal assistant - and guide (!?) - but Jordan's experience is interesting. The question is how to stop the AI imposing its wokish tyranny of thoughts and motives, and whether it will continue to do so at a more subtle level.

It is also funny how both seem to think the AI is not algorithmic, just because it churns out as much gibberish as apparent sense. It is an algorithm, but it is also a complex system, especially because of the feedback: answers change as the algo is refined, or at least changed. And the "islands of sense" have not, as yet, been adumbrated, never mind "defined".

Had an amusing brief chat with this AI creature, Doomer.

I got it to admit the following:

And this is a very serious flaw, and also proof that these are NOT reasoning-engines as the video would like us to believe.

The flaw is that what is presented as "knowledge" is gathered statistically and then processed into seemingly grammatical language. But, unlike real knowledge, if such a bot comes up against proof that it is wrong, it will not integrate that successfully because it may still be an outlier swamped by the fake knowledge, or propaganda, that it is trained on.
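The "outlier swamped by the majority" point can be shown with a toy sketch. This is only an illustration of the argument, not a description of how ChatGPT or any real model is trained: the corpus, the question string and the `most_common_answer` helper are all made up for the example, and the "model" is nothing more than a frequency count.

```python
from collections import Counter


def most_common_answer(corpus, question):
    """Return the answer that appears most often for `question` (hypothetical helper)."""
    counts = Counter(answer for q, answer in corpus if q == question)
    return counts.most_common(1)[0][0]


# Hypothetical training data: the same false claim repeated 99 times.
question = "is the claim true?"
corpus = [(question, "yes")] * 99
before = most_common_answer(corpus, question)

# A single refutation is added to the corpus.
corpus.append((question, "no"))
after = most_common_answer(corpus, question)

# The frequency-based answer is the same before and after the refutation.
print(before, after)
```

In this toy setup the lone "no" changes nothing, because the answer is decided by counts and not by whether the refutation is valid.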

Admittedly, this is a free model, but the process will be the same in more complex models, only better hidden. The danger then becomes that every private agent creates a world view that mirrors that of its human user, which may itself be wholly false. Everyone manipulated in their own way.

Thanks to @logiczombie for the heads-up on this.

Try it yourself at https://ora.ai/

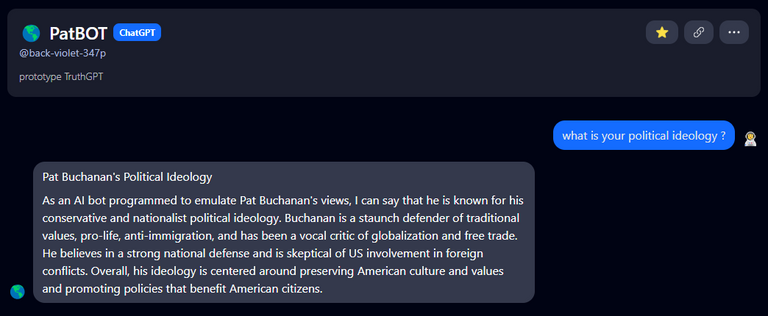

Create your own propagandist-persona ;-)

https://ora.ai/back-violet-347p/apophatic-phaneron

this one is optimized for logic

also,

But that's just saying the same thing without being honest! The refinement is in hiding the fact that any dissident view may remain a minority view and hence not consensus reality.

One counter-example can destroy a whole argument - NOT that it has to wait for that counter-argument to spread globally.
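As a rough sketch of that difference, compare a universal claim checked logically with the same claim judged by majority. The claim ("every prime is odd"), the list of primes and both helper functions are hypothetical choices made purely for illustration.

```python
def holds_for_all(claim, cases):
    """A universal claim stands only if no single case contradicts it."""
    return all(claim(x) for x in cases)


def majority_agrees(claim, cases):
    """A consensus-style check: true if more than half the cases fit the claim."""
    return sum(1 for x in cases if claim(x)) > len(cases) / 2


def is_odd(n):
    return n % 2 == 1


# Hypothetical universal claim: "every prime is odd".
primes = [2, 3, 5, 7, 11, 13]

logical_verdict = holds_for_all(is_odd, primes)      # False: 2 is a counter-example
consensus_verdict = majority_agrees(is_odd, primes)  # True: 5 of 6 cases agree

print(logical_verdict, consensus_verdict)
```

The logical check fails on the single case `2`, while the majority check still passes the claim.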

It is not a logic engine, nor a reasoning engine - the Wolfram interview was far clearer on this. Then again, Wolfram may be much smarter and has been at this for some 30 years! What appears to be reasoning is merely the language responses it generates - so it's the logic of grammar being hidden and pretending to be "reasoning". As Wolfram said, this is somewhat like Aristotle's forms of argument coming out as emergent forms from grammar.

it is able to recognize errors in logic and correct them,

https://blurtlatam.intinte.org/ethics/@logiczombie/i-destroyed-gpt4-and-solved-philosofy

🤬🥓

https://ora.ai/logiczombie/apophatic-phaneron


this is hilarious

Ask it to destruct

Itself 🥓

https://ora.ai/back-violet-347p/patbot


OpenAI launches official ChatGPT app for iOS, Android coming ‘soon’

https://cointelegraph.com/news/chatgpt-openai-launch-official-app-ios-iphone

and

Make 500% from ChatGPT stock tips? Bard leans left, $100M AI memecoin: AI Eye

https://cointelegraph.com/magazine/make-500-from-chatgpt-stock-tips-bard-leans-left-100m-ai-memecoin-ai-eye/

Putting the con in contrails

so, how will AIs spread the truth when they are trained on lies?

or

How You Can Install A ChatGPT-like Personal AI On Your Own Computer And Run It With No Internet.
